Welcome Back Intel Xeon 6900P Reasserts Intel Server Leadership – ServeTheHome

The Intel Xeon 6900P offers twice the cores, huge memory bandwidth, and more connectivity. Welcome back, Intel, to the top-end server CPU market.

Welcome back Intel! Intel Xeon has trailed AMD EPYC in P-core counts for around seven years. Five years ago, AMD pulled far ahead with the AMD EPYC 7002 “Rome” series and never looked back in terms of raw compute. Today marks the first time in about 86 months that Intel has a leadership server x86 CPU again. The Intel Xeon 6 with P-cores series, more aptly named the Intel Xeon 6900P series, brings 128 cores, 12 memory channels, accelerators, new process technology, and more to Intel Xeon.

Of course, there is a lot going on here, so let us get to it.

Video Version – Coming

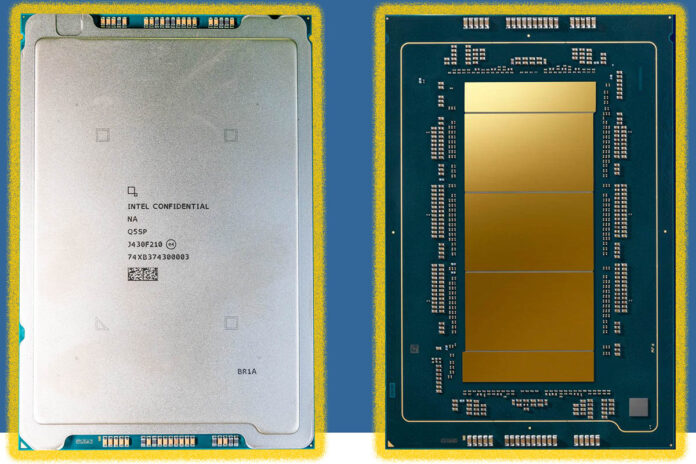

We had a very short amount of time to do this one. Last week, we were at Intel in Oregon learning about the new chips, but then we went to film what will be our biggest video of the year just after. Our pre-production “Granite Rapids-AP” system arrived, and we had the weekend to work on it, which was a challenge when some benchmarks took over a day to run through test scripts on the 512-thread system.

Still, Intel furnished us with a pre-production development system to use with its top-bin chips. We need to say this is sponsored by Intel. For some of the power figures we usually would want to publish on a release day piece like this, we are going to wait for an OEM system with more realistic fan curves. The Intel platform was rough around the edges. That is to say, we are going to have more on this story. We will also have a video, but it is going to go live a bit later today. When it is live, we will embed the video.

Let us get to it.

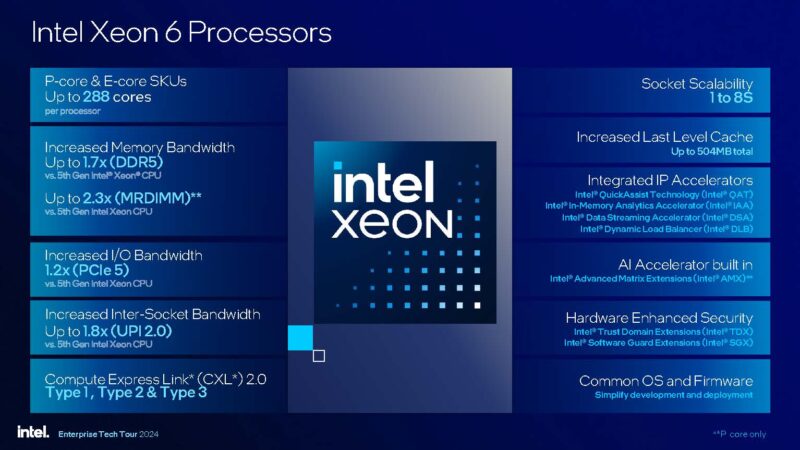

When a Xeon is Not Just a Xeon, but a XEON

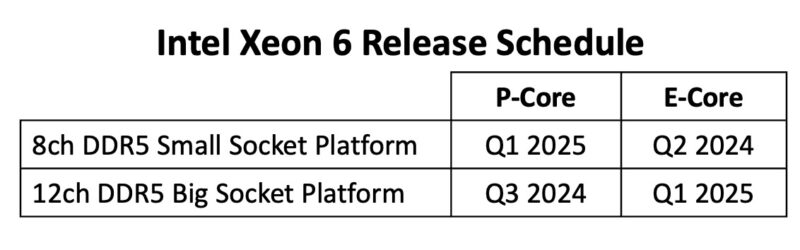

Starting here, it is essential to understand that Xeon 6 is like an ultimate slow roll-out. Today, we have the Intel Xeon 6900P series, the top-end part with 128 P-cores. A few months ago, we reviewed the Intel Xeon 6700E series “Sierra Forest,” which has 144 E-cores and uses a different socket and has half the TDP. Both are Intel Xeon 6, but they are very different. That leads to the Xeon 6 family covering a lot of ground, but not necessarily all in the same product.

For years, when we discussed a generation of Intel Xeon CPUs, it was the same socket and same core architecture, so long as we overlook aberrations like the LGA1356 Sandy Bridge-EN and Ivy Bridge-EN. Today, we effectively have a 2×2 matrix of E-cores and P-cores, with today’s launch being the 12-channel P-core platform.

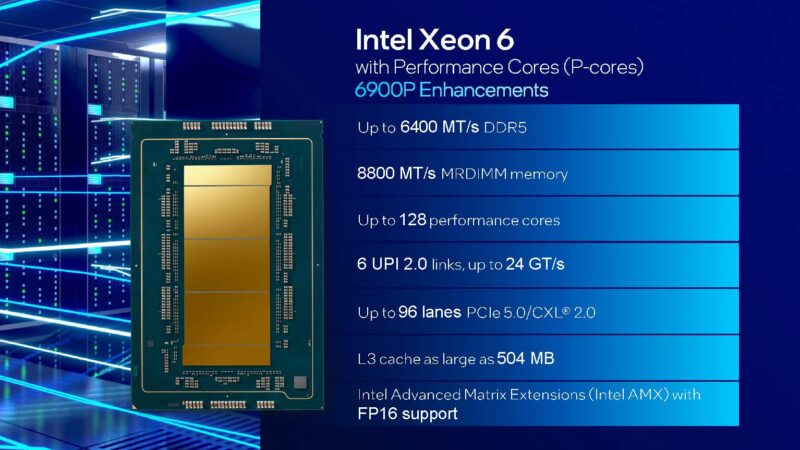

Important to note is that this is not the high-core count “Sierra Forest-AP” 288 core launch for scale-out cloud-native workloads. The Intel Xeon 6900P “Granite Rapids-AP” is Intel’s big iron dual socket Xeon for high-performance computing. We get 12 channels of DDR5-6400 or 8800MT/s MRDIMM/MCR DIMM memory (more on this in a bit), so Intel can now match AMD’s memory channel count and exceed AMD’s memory bandwidth. 128 full P-cores is more than AMD currently offers (96 with Genoa, since Bergamo uses the lower-cache cores). There are 96 lanes of PCIe Gen5 per CPU for 192 lanes total, and there is CXL 2.0 support, all while enabling a full six UPI links for socket-to-socket bandwidth. L3 cache is no longer an “AMD has way more” story on its mainstream parts (non Genoa-X) now that the Intel Xeon 6980P has 504MB of L3 cache.
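To put the memory numbers in context, here is a quick back-of-the-envelope sketch of theoretical peak bandwidth per socket. It assumes each DDR5 channel moves 8 bytes (64 data bits) per transfer and ignores real-world efficiency, so treat the results as upper bounds rather than measured figures. The function name and labels are ours, for illustration only.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth for one socket, in decimal GB/s.

    Assumption: each channel carries 8 bytes of data per transfer.
    """
    return channels * mega_transfers * bytes_per_transfer / 1000


# 12 channels per socket, at the two memory speeds mentioned in the article.
for label, rate in (("DDR5-6400", 6400), ("8800MT/s MRDIMM", 8800)):
    print(f"{label}: {peak_bandwidth_gbs(12, rate):.1f} GB/s per socket")
```

On paper, that works out to 614.4 GB/s per socket at 6400MT/s and 844.8 GB/s at 8800MT/s.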

While we focus a lot on the top-end SKUs, a lot of organizations buy midrange parts. That is something Intel will be rolling out in the future in its smaller socket designs. This is important as Intel will have modern parts for those who may want 32 cores per socket, but are not going to populate 12 memory channels and spend a lot on expensive motherboards that can handle larger sockets.

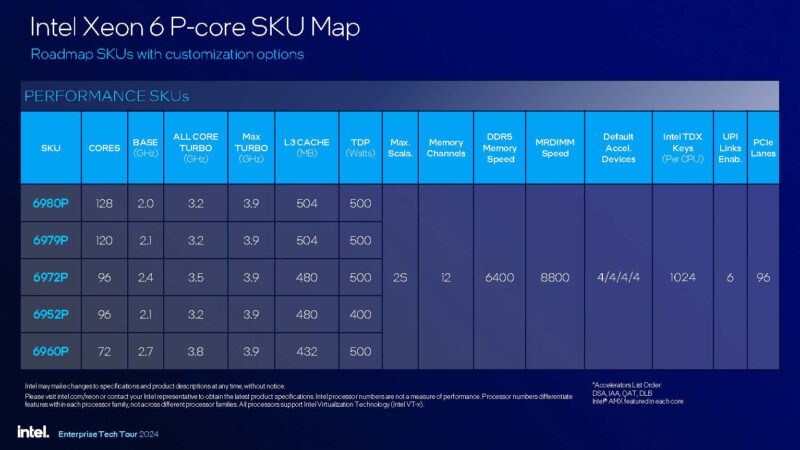

Given that Intel has another socket and other families of CPUs, the Xeon 6900P series comprises only five public SKUs that range from 72 to 128 cores. The 128 core part is the only one whose core count is not divisible by 3, so we would expect hyper-scalers and others to have custom SKUs based on the 120 core part (Intel Xeon 6979P), but Intel does have the 128 core SKU. Also of note, four of the five feature an unapologetically high 500W TDP, which is new for CPUs.

Another interesting part is the Intel Xeon 6960P with 72 cores, the same core count as the CPU portion of an NVIDIA Grace Hopper. Intel is using SMT, so it is technically a 72 core/144 thread part, but it also gives Intel around 6MB of L3 cache per core and higher clock speeds. For AI servers, Intel has been winning sockets even without these new monster CPUs, and we will discuss why later in this piece.

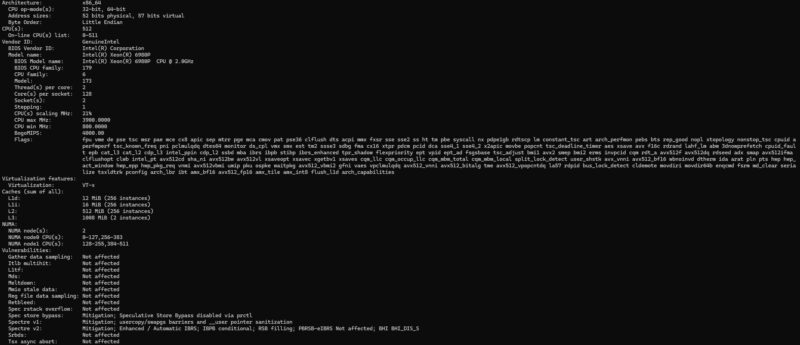

Getting to the chips, here is the lscpu output of the Intel Xeon 6980P, the top-bin 128 core/ 256 thread part in a dual socket configuration. As you can see, we have over 1GB of L3 cache in the system and plenty of cores.
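The system totals follow from simple arithmetic on the per-socket figures quoted above. Here is a minimal sketch, assuming two sockets, 128 cores with two threads per core, and 504MB of L3 cache per CPU; the function name is ours.

```python
def system_totals(sockets: int, cores_per_socket: int, threads_per_core: int, l3_mb_per_socket: int) -> dict:
    """Aggregate core, thread, and L3 cache totals across all sockets."""
    cores = sockets * cores_per_socket
    return {
        "cores": cores,
        "threads": cores * threads_per_core,
        "l3_mb": sockets * l3_mb_per_socket,
    }


# Dual Intel Xeon 6980P, using the per-socket figures from the article.
totals = system_totals(sockets=2, cores_per_socket=128, threads_per_core=2, l3_mb_per_socket=504)
print(totals)  # {'cores': 256, 'threads': 512, 'l3_mb': 1008}
```

That is where the 512-thread figure comes from, and 1008MB of combined L3 is the source of the roughly 1GB number.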

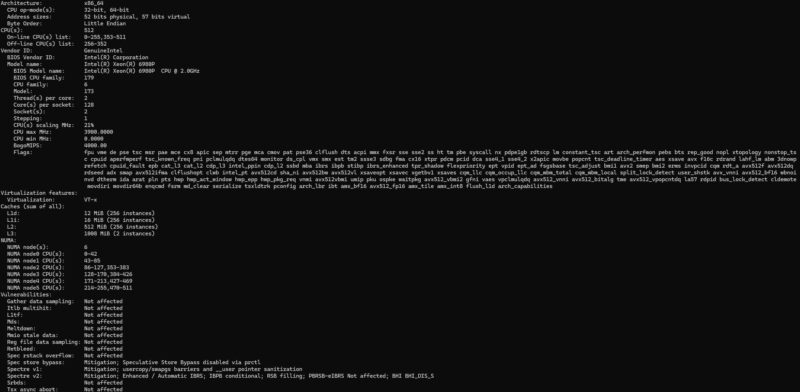

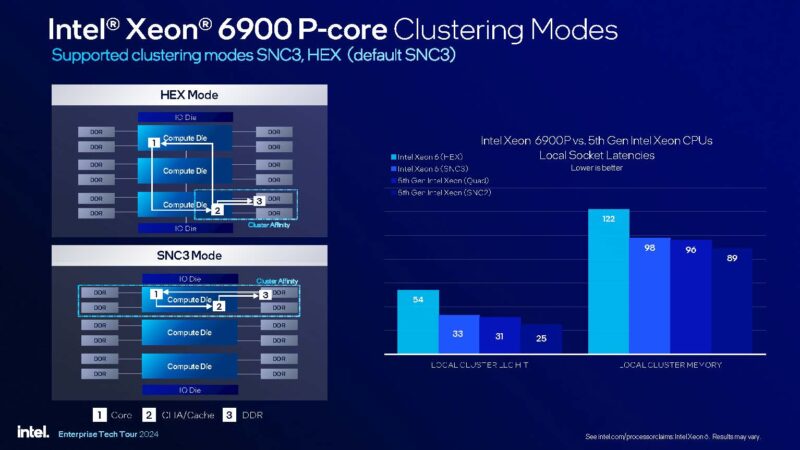

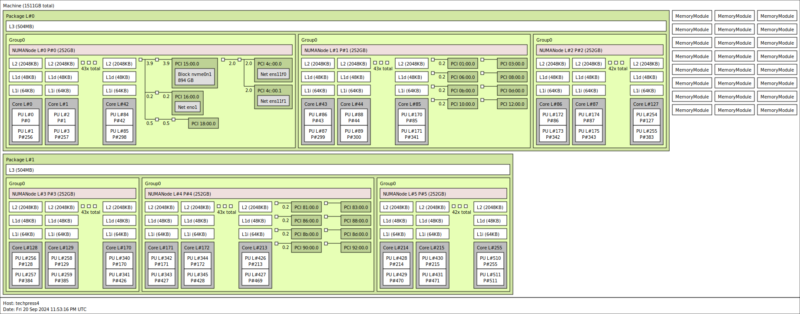

At the same time, we expect many of these systems to be run as three NUMA nodes because of how the silicon is constructed.

Intel keeps its memory controllers on the same physical die or compute tile as its cores. As a result, keeping memory access localized on those tiles can yield better performance.

It also yields a somewhat funky topology since two of the SNC3 NUMA nodes have 43 cores, and one has 42 cores. Intel has a 120 core SKU that might be more popular for both yield and for balance purposes. Still, it would have been cool if Intel used a 3x 43 tile design to make a 129 core CPU just as a marketing SKU to say it has 129 cores, or one more than AMD.
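The uneven split is just what happens when a core count that is not a multiple of three is spread over three compute tiles. As a rough sketch, assuming cores are distributed as evenly as possible across the tiles (the helper name is hypothetical, and this is arithmetic rather than a description of how Intel actually fuses cores):

```python
from __future__ import annotations


def split_cores(total_cores: int, tiles: int = 3) -> list[int]:
    """Spread cores across tiles as evenly as possible, larger groups first."""
    base, extra = divmod(total_cores, tiles)
    # The first `extra` tiles each take one additional core.
    return [base + 1 if i < extra else base for i in range(tiles)]


print(split_cores(128))  # [43, 43, 42]
print(split_cores(120))  # [40, 40, 40]
print(split_cores(129))  # [43, 43, 43]
```

The 120 core count divides cleanly into three 40 core NUMA nodes, which is the balance argument for that SKU.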

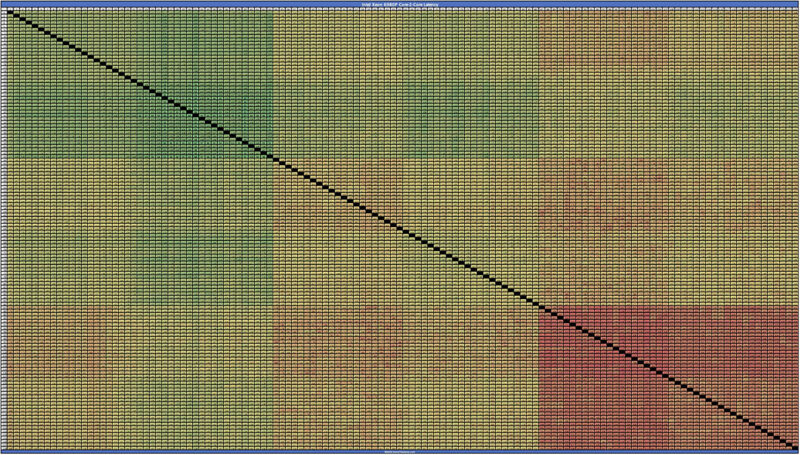

This tiled structure is easy to see in core-to-core latency charts. As unreadable as this probably looks after being compressed for the web, just know this is the 128 core version with Hyper-Threading off. The 512 thread dual socket version took forever to run but was even more of an eye chart.

The behavior above can be explained by Intel’s design, putting three large compute tiles on a chip along with two I/O dies.

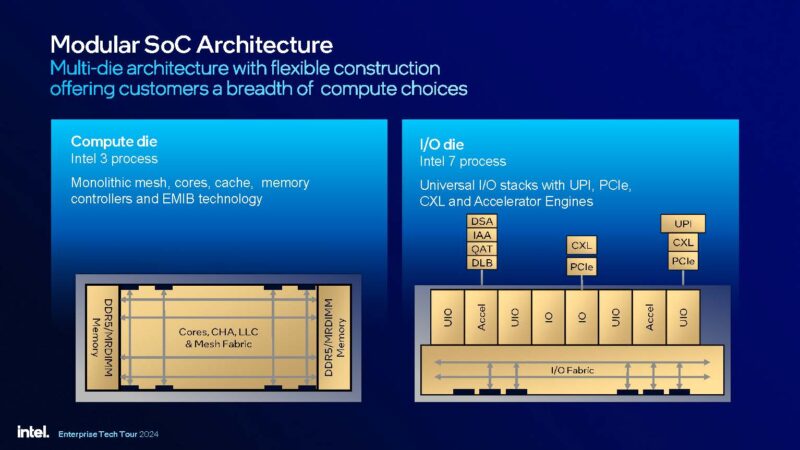

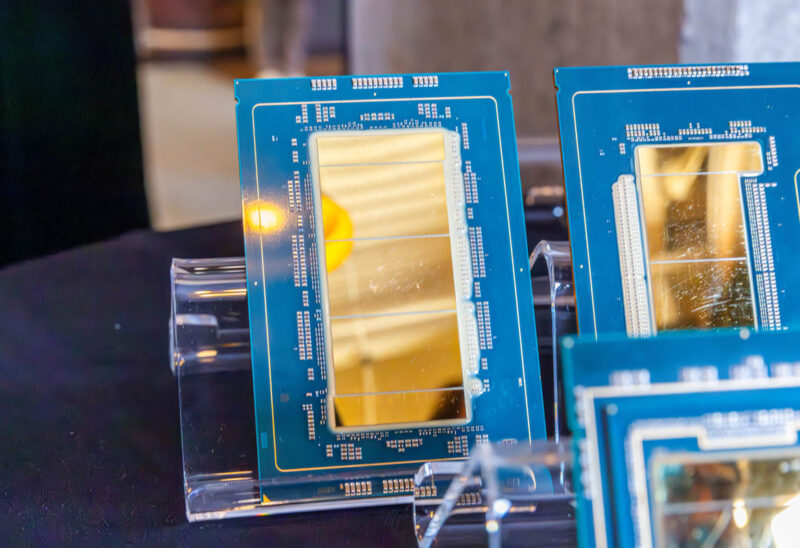

Part of what allows Intel to come back into the orbit of AMD’s top-end parts, and be competitive with AMD’s next-generation Turin, is that it is using new process technology. Intel 3 is being used for the compute die that also has its memory controllers, and Intel 7 for the I/O die with the chip’s UPI, PCIe, and accelerators.

AMD pulled ahead in 2019 with Rome partly by moving to a chiplet design and partly because Intel 10nm was so delayed. We will see more from Intel’s chips now that its process technology is rapidly improving. Intel is bridging chiplets now with more advanced EMIB packaging, which is why its tiles look more tightly packed, while AMD’s compute tiles look like their own islands set apart from AMD’s I/O dies.

Still, the shift for Intel is very notable in this generation. Instead of only focusing on workloads accelerated by the company’s built-in accelerators, Intel now has a monster chip that can go head-to-head with AMD on raw CPU performance, but then also has its accelerators built-in.

One of Intel’s biggest features, however, is integrating those memory controllers into compute tiles, and then offering very fast memory options, so let us get to that next.

Source: Servethehome.com